The Interaction son musique mouvement team (formerly Interactions musicales temps réel) conducts research and development on interactive systems for music and the performing arts. Our work covers the entire interactive chain. This includes multimodal capture and analysis of instrumentalists' gestures and sounds, tools for synchronization and interaction management, and real-time sound synthesis and processing techniques. This research and its associated software are generally developed within interdisciplinary projects that bring together scientists, artists, educators, and designers. It finds applications in artistic creation, music education, movement learning, and the digital audio industry.

For more information:

Main research themes

Modeling and analysis of sound and gesture

This theme brings together theoretical work on the analysis of sound and gesture streams or, more generally, of multimodal temporal morphologies. It covers various audio analysis techniques and the study of instrumental playing and dance gestures.

Technologies for multimodal interaction

This theme covers our tools for the multimodal analysis and recognition of gesture and sound, for synchronization (gesture following, for example), and for visualization.

Interactive sound synthesis and processing

This theme mainly covers sound synthesis and processing methods based on recorded sounds or large sound corpora.

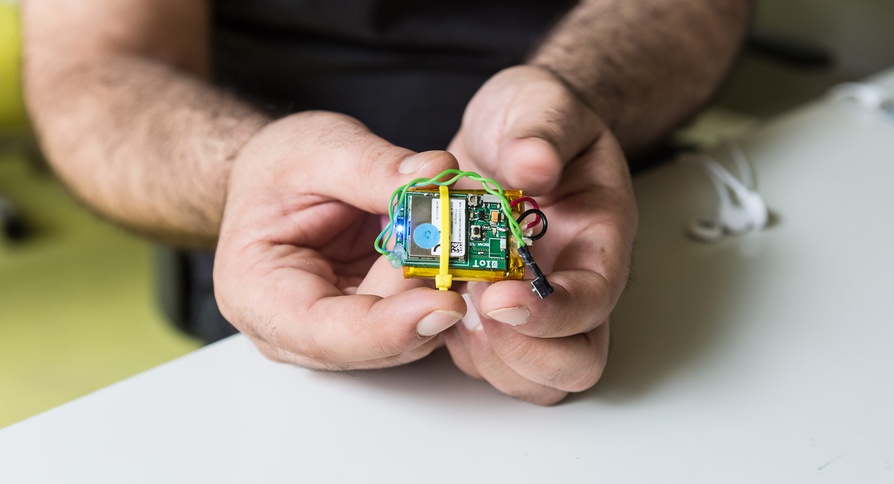

Gesture capture systems and augmented instruments

This theme covers our development of gestural interfaces and augmented instruments for music and the performing arts.

Areas of expertise

Interactivity, real-time computing, human-computer interaction, signal processing, motion capture, sound and gesture modeling, statistical modeling and machine learning, real-time sound analysis and synthesis