"Créativité dans les réseaux neuronaux musicaux génératifs"

Les Mercredis de STMS and the Musical Representations team of the STMS Laboratory (Ircam - CNRS - Sorbonne Université - Ministère de la Culture) invite you to meet Rodrigo Cádiz, who will present "Creativity in Generative Musical Neural Networks".

Everyone is welcome to attend in person at Ircam. The talk can also be followed live at https://www.youtube.com/watch?v=PfpLjOsurSE

The presentation will be in English.

Abstract:

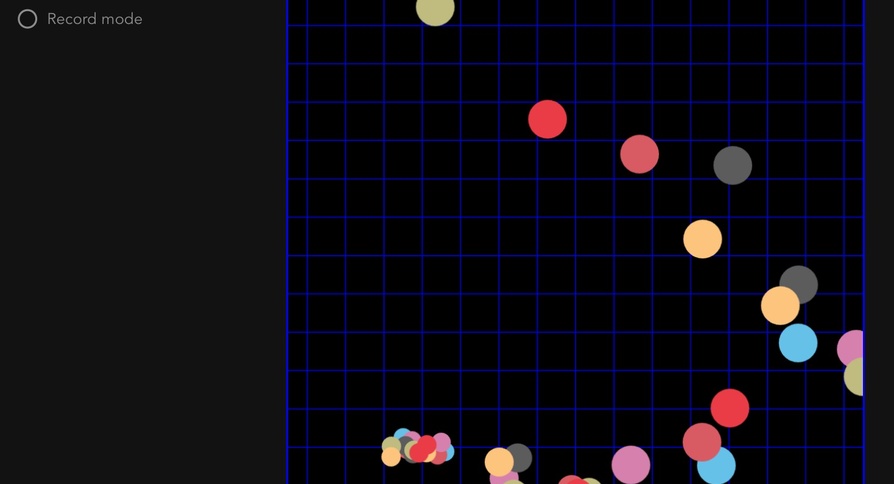

Deep learning, one of the fastest-growing branches of artificial intelligence, has become one of the most relevant areas of research and development in recent years, especially since 2012, when a neural network surpassed the most advanced image classification techniques of the time. This spectacular development has not been alien to the world of the arts, as recent advances in generative networks have made possible the artificial creation of high-quality content such as images, movies, or music. We believe that these new generative models pose a great challenge to our current understanding of computational creativity. If a robot can now create music that an expert cannot distinguish from music composed by a human, or create novel musical entities that were not known at training time, or exhibit conceptual leaps, does that mean the machine is creative? We believe that the emergence of these generative models clearly signals that much more research is needed in this area. We would like to contribute to this debate with two case studies of our own: TimbreNet, a variational auto-encoder network trained to generate audio-based musical chords, and StyleGAN Pianorolls, a generative adversarial network capable of creating short musical excerpts despite having been trained with images and not musical data.
We discuss and assess these generative models with respect to creativity, we show that they are in practice capable of learning musical concepts that are not obvious from the training data, and we hypothesize that these deep models, based on our current understanding of creativity in robots and machines, can in fact be considered creative.

Biography:

Rodrigo F. Cádiz is a composer, researcher, and engineer. He studied composition and electrical engineering at the Pontificia Universidad Católica de Chile (UC) in Santiago and obtained his Ph.D. in Music Technology from Northwestern University. His compositions, comprising approximately 60 works, have been presented at venues and festivals around the world. His catalog includes works for solo instruments, chamber music, symphonic and robot orchestras, visual music, computers, and new interfaces for musical expression. He has received several composition prizes and artistic grants in Chile and the United States. He has authored around sixty scientific publications in peer-reviewed journals and international conferences. His areas of expertise include sonification, sound synthesis, digital audio processing, computer music, composition, new interfaces for musical expression, and the musical applications of complex systems. He has obtained research funding from Chilean government agencies such as ANID and CNCA. He received a Google Latin American Research Award (LARA) in the field of auditory graphs. In 2018, Rodrigo was composer in residence with the Stanford Laptop Orchestra (SLOrk) at the Center for Computer Research in Music and Acoustics (CCRMA), and Tinker Visiting Professor at the Center for Latin American Studies at Stanford University. In 2019, he received the Excellence in Artistic Creation Award at UC. He chaired the 2021 edition of the International Computer Music Conference. He is currently a professor at the Music Institute and the Department of Electrical Engineering at UC.