A Neural Voice Transformation Framework for Modification of Pitch and Intensity

Frederik Bous completed his thesis, "A Neural Voice Transformation Framework for Modification of Pitch and Intensity", in the Sound Analysis-Synthesis team of the STMS Laboratory (Ircam - CNRS - Sorbonne Université - Ministère de la Culture). His research was funded by an EDITE doctoral grant and by the ANR project "ARS". In parallel, his work led him to collaborate with the artist Judith Deschamps (artistic research resident at Ircam) to recreate the voice of Farinelli.

He invites you to his thesis defense at Ircam on Thursday, 21 September, at 2:30 pm. The presentation will be given in English and can also be followed live on Ircam's YouTube channel at the following link: https://youtube.com/live/rADj7VUEKt0

The jury will be composed of:

- Prof. Thierry Dutoit - Full Professor - Université de Mons (Belgium) - Reviewer

- Prof. Yannis Stylianou - Full Professor - University of Crete (Greece) - Reviewer

- Dr. Christophe d'Alessandro - Research Director (HDR) - Institut Jean-Le-Rond-d'Alembert - Examiner

- Dr. Jordi Bonada - Researcher - Pompeu Fabra University (UPF) (Spain) - Examiner

- Dr. Nathalie Henrich - Research Director (HDR) - Université Grenoble Alpes, UMR 5216 - Examiner

- Dr. Axel Roebel - Research Director (HDR) - Ircam, STMS Lab - Thesis Supervisor

Abstract:

The human voice has been a source of fascination and an object of research for more than 100 years, and numerous voice processing technologies have been developed. In this thesis, we focus on vocoders: methods that provide parametric representations of voice signals and that can be used for voice transformation. Previous studies have demonstrated significant limitations of approaches based on explicit signal models: realistic voice transformations require the dependencies between the different properties of the voice to be modeled accurately, but unfortunately none of the models proposed so far has been refined enough to express these dependencies correctly.

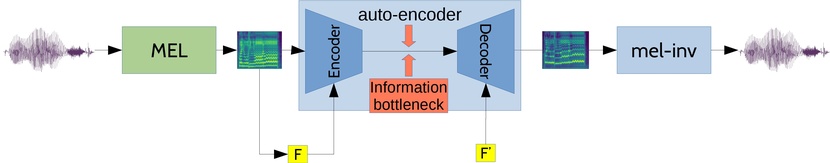

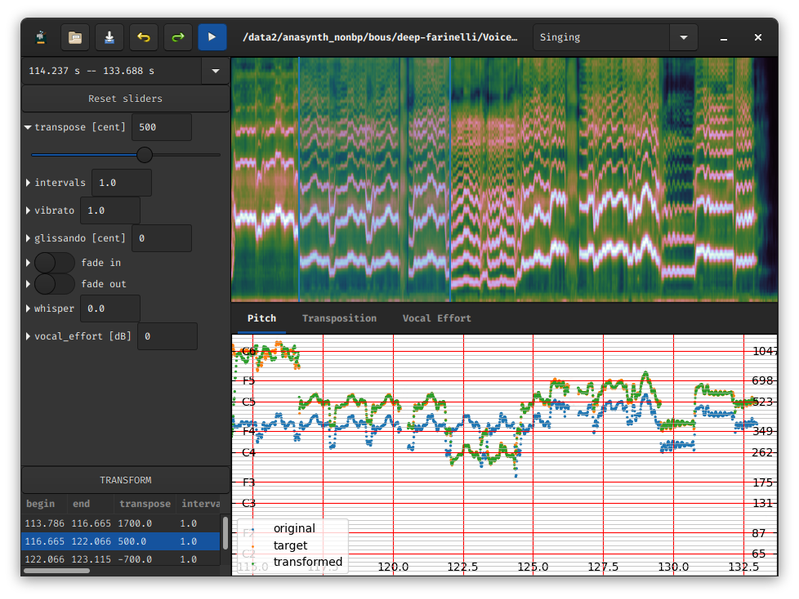

Recently, deep neural networks have shown impressive success in learning parameter dependencies from data, and this thesis aims to build a voice transformation system based on deep neural networks. The framework operates in two stages. First, a neural vocoder establishes an invertible mapping between raw voice signals and a mel-spectrogram representation. Second, an autoencoder establishes an invertible mapping between the mel-spectrogram and the voice representation used for voice transformation. The autoencoder's task is to create the so-called residual code, following two objectives. First, together with the control parameter, the residual code must allow the original mel-spectrogram to be reconstructed. Second, the residual code must be independent of (disentangled from) the control parameter. If these objectives are met, coherent voice signals can be generated from the residual code and a potentially modified target value of the control parameter.
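The encode, modify, decode loop described above can be illustrated with a minimal NumPy sketch. This is not the thesis's implementation: the untrained random linear layers, the dimensions (80 mel bands, a 4-dimensional residual code), the choice of log-F0 as the control parameter, the fact that the encoder here sees only the mel-spectrogram, and all names (`encode`, `decode`, `semitone_shift`, `transform`, `N_MELS`, `CODE_DIM`) are hypothetical assumptions. It only shows the data flow. Because nothing is trained, the residual code is not actually disentangled, and the neural vocoder stage that turns the output mel-spectrogram back into a waveform is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

N_MELS = 80    # assumed number of mel bands per frame (hypothetical)
CODE_DIM = 4   # assumed size of the narrow residual code (hypothetical)

# Untrained random weights standing in for the encoder and decoder networks.
W_enc = rng.standard_normal((N_MELS, CODE_DIM)) * 0.1
W_dec = rng.standard_normal((CODE_DIM + 1, N_MELS)) * 0.1


def encode(mel: np.ndarray) -> np.ndarray:
    """Map mel frames (T, N_MELS) to a low-dimensional residual code (T, CODE_DIM)."""
    return np.tanh(mel @ W_enc)


def decode(code: np.ndarray, control: np.ndarray) -> np.ndarray:
    """Rebuild mel frames from the residual code plus one control value per frame (T,)."""
    x = np.concatenate([code, control[:, None]], axis=1)  # (T, CODE_DIM + 1)
    return x @ W_dec                                      # (T, N_MELS)


def semitone_shift(f0_hz: np.ndarray, semitones: float) -> np.ndarray:
    """Scale F0 by 2**(semitones / 12); unvoiced frames (f0 == 0) stay at 0."""
    return f0_hz * 2.0 ** (semitones / 12.0)


def transform(mel: np.ndarray, f0_hz: np.ndarray, semitones: float) -> np.ndarray:
    """Encode, replace the control parameter by its shifted value, decode."""
    code = encode(mel)
    target_f0 = semitone_shift(f0_hz, semitones)
    # Log-F0 as control; clamp to 1 Hz so unvoiced frames (0 Hz) map to log(1) = 0.
    control = np.log(np.maximum(target_f0, 1.0))
    return decode(code, control)


# Usage: shift a 100-frame utterance at a constant 220 Hz up by one octave.
T = 100
mel = rng.standard_normal((T, N_MELS))
f0 = np.full(T, 220.0)
out = transform(mel, f0, 12)
print(out.shape)  # (100, 80)
```

The point of the design is that the control parameter enters only at the decoder, so changing it at transformation time leaves the residual code untouched; in a trained system the decoder is what would have learned how the other voice properties should follow the new parameter value.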

In the first part of the thesis, we discuss the different approaches to building a neural vocoder and the advantages of using the mel-spectrogram over traditional parametric spaces. In the second part, we present the proposed autoencoder, which uses an information bottleneck to achieve disentanglement. We present experimental results for two control parameters: the fundamental frequency and the voice level. Fundamental frequency transformation is a frequently studied task, which allows us to compare our approach with existing techniques and to study, on a well-known task, how the autoencoder models the dependency on other voice properties. For voice level, we face the problem of scarce annotations. We therefore first propose a new technique for estimating the voice level in large voice databases, and then use these voice level annotations to train a bottleneck autoencoder that allows the voice level to be modified.
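As a simplified illustration only, assuming the bottleneck is realized by restricting the dimension of the residual code (the thesis may rely on additional mechanisms), the training objective of such an autoencoder can be written with a mel-spectrogram $M$, a control parameter $p$ (fundamental frequency or voice level), an encoder $E$ and a decoder $D$; this notation is introduced here and is not taken from the thesis:

$$
z = E(M), \qquad \hat{M} = D(z, p), \qquad \mathcal{L} = \lVert M - \hat{M} \rVert_2^2, \qquad \dim z \ll \dim M.
$$

The intuition is that $p$ is already given to the decoder, so a narrow $z$ gives the encoder little reason to spend its limited capacity re-encoding $p$. At transformation time, $p$ is replaced by a target value $p'$, and $D(z, p')$ is passed to the neural vocoder to produce the transformed voice.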