Designing New Scales of Music Creation Using Hierarchical Statistical Learning

Théis Bazin will defend his CIFRE thesis, entitled "Designing New Scales of Music Creation Using Hierarchical Statistical Learning". The thesis was carried out under the academic supervision of Dr. Mikhail MALT in the Représentations Musicales team of the STMS laboratory (Ircam-CNRS-Sorbonne Université-Ministère de la Culture) and the industrial supervision of Dr. Gaëtan Hadjeres (SonyAI) at the Sony CSL Paris laboratory. It was also co-supervised by Dr. Philippe Esling. This work was supported by the ANRT under CIFRE grant no. 2019.009.

The defense will take place at Ircam. You can also follow it live on Ircam's YouTube channel: https://youtube.com/live/RAryXo2IS-4

Jury

Prof. Wendy MACKAY – Reviewer – Research Director, INRIA-Saclay, ex-situ research group

Prof. Geoffroy PEETERS – Reviewer – Professor, Image-Data-Signal (IDS) department, LTCI, Telecom Paris, Institut Polytechnique de Paris

Prof. Cheng-Zhi Anna HUANG – Examiner – Associate Professor, MILA, Université de Montréal – Researcher, Google Magenta – Canada CIFAR AI Chair

Dr. Jean BRESSON – Examiner – Research Director, RepMus, STMS, IRCAM, Sorbonne Université, CNRS – Team Product Owner, Ableton

Dr. Mikhail MALT – Thesis supervisor – Research Scientist, RepMus, STMS, IRCAM, Sorbonne Université, CNRS

Dr. Gaëtan HADJERES – Thesis co-supervisor – Senior Research Scientist, SonyAI

Thesis abstract

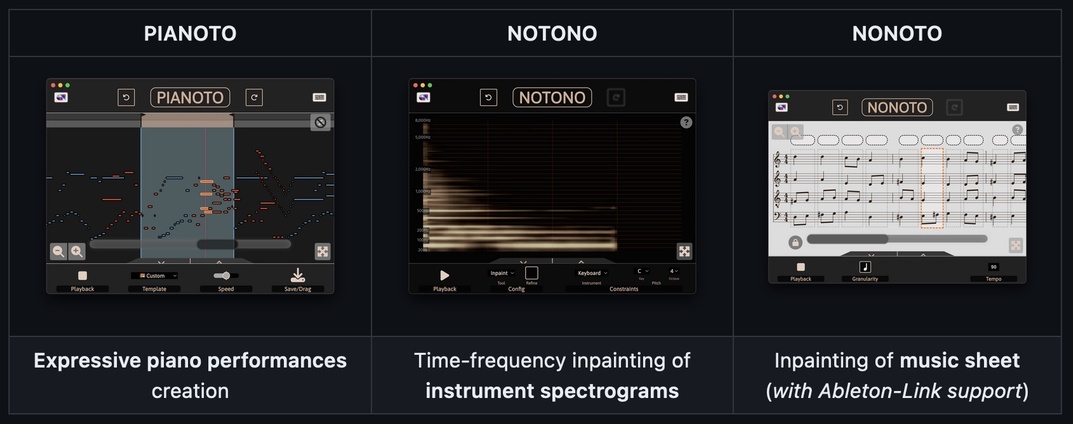

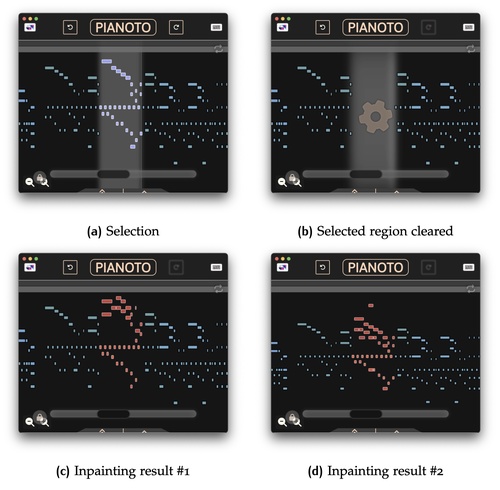

Modern music creation unfolds across many different time scales: from the vibration of a string or the resonance of an electronic instrument, at the millisecond scale, through the few seconds typical of an instrumental note, up to the tens of minutes of operas or DJ sets. The interweaving of these multiple scales has led to the development of many technical and theoretical tools that make this work of manipulating time efficient. These abstractions, such as musical scales, rhythmic notations, or common audio synthesis models, pervade today's music creation tools, both software and hardware. Yet these abstractions, most of which emerged during the 20th century in the West on the basis of classical theories of written music, are not free of cultural assumptions. They reflect deliberate principles aimed at smoothing out certain aspects of music (for example, micro-deviations from metronomic time or micro-deviations in frequency from an idealized pitch) whose high degree of physical variability typically makes them impractical for music writing. These trade-offs are sound when written music is meant to be performed by musicians, who can reintroduce variation and physical and musical richness. They prove limiting, however, in computer-assisted music creation, where the machine coldly reproduces these abstractions and they tend to restrict the diversity of music that can be produced. Through the presentation of several typical music creation interfaces, I show that an essential factor is the scale of the human-machine interactions offered by these abstractions.
At their most flexible, as with audio representations or piano rolls on unquantized time, these abstractions prove difficult to manipulate, because they require a high degree of precision that is particularly ill-suited to modern mobile and touch devices. Conversely, in many commonly used abstractions with discretized time, such as scores or sequencers, they prove constraining for the creation of culturally diverse music. In this thesis, I argue that artificial intelligence, through its ability to build high-level representations of given complex objects, makes it possible to construct new scales of music creation designed for interaction, and thus to offer radically new approaches to music creation. I present and illustrate this idea through the design and development of three web-based prototypes for AI-assisted music creation, one of which is based on a novel neural model for the inpainting of musical instrument sounds, also designed as part of this thesis. These high-level representations (for scores, piano rolls, and spectrograms) operate at a time-frequency scale that is coarser than that of the original data but better suited to interaction. By enabling localized transformations on this representation while also capturing, through statistical modeling, the aesthetic specificities and micro-variations of the musical training data, these tools make it possible to obtain musically rich results easily and controllably. Through the real-world evaluation of these three prototypes by several artists, I show that these new scales of interactive creation are useful to experts and novices alike.
Moreover, because AI assists with technical aspects that normally require precision and expertise, these new scales lend themselves to use on touch screens and mobile devices.